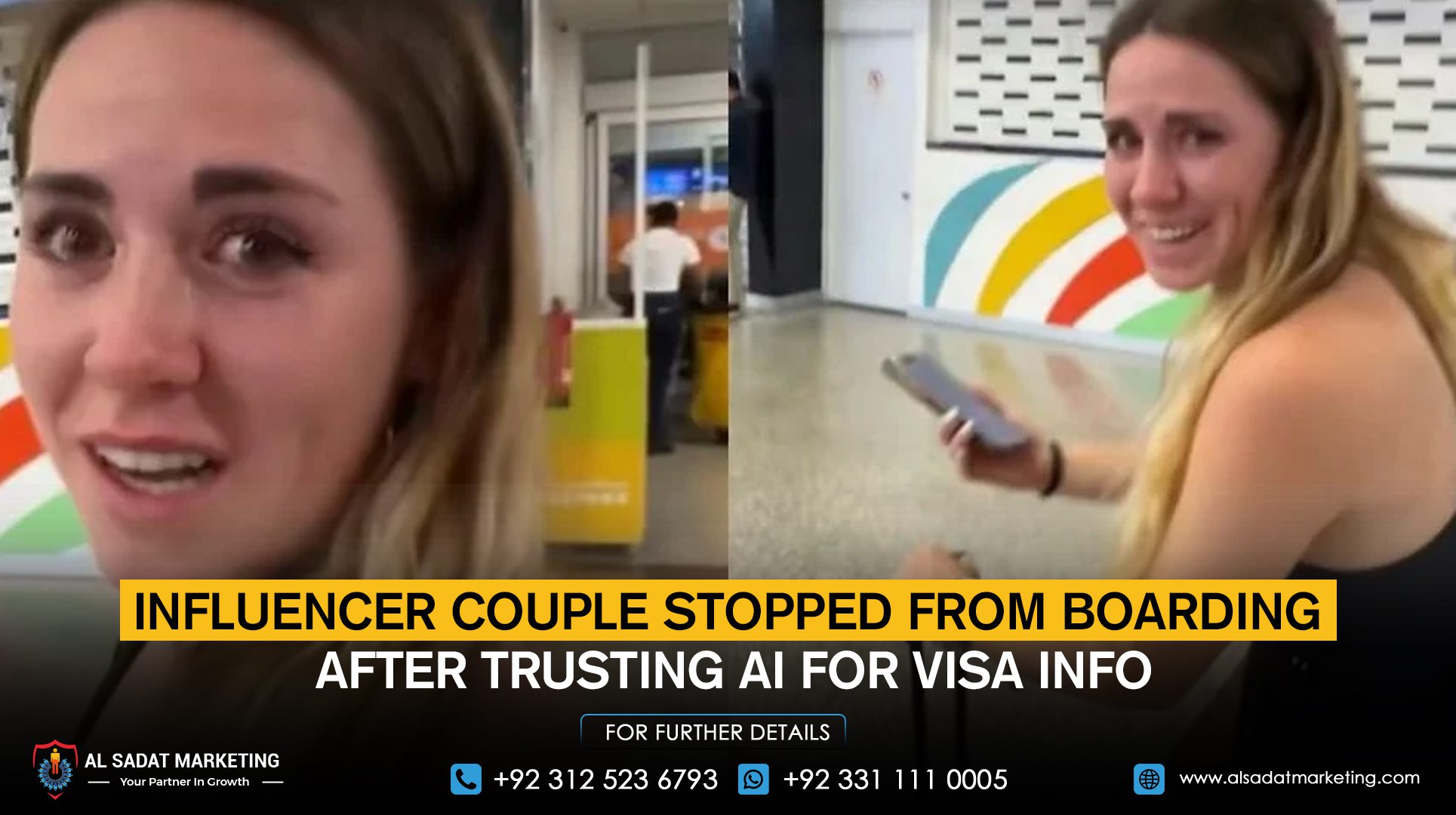

A Spanish influencer couple says they were stopped from boarding a flight to Puerto Rico after following incorrect travel advice from an artificial intelligence chatbot. Caldass, one half of the couple, explained that she asked the AI tool about visa requirements and was told none were needed. “I always research a lot, but I asked the chatbot, and it said no,” she said in a TikTok video that has since drawn more than 6 million views.

The couple appeared emotional in the footage, with Caldass even suggesting the chatbot might have given misleading information as “revenge” for insults she had previously directed at it. Social media reaction was mixed, with many users criticising them for not checking official sources. “Natural selection, I suppose. If you are travelling across the ocean and you rely solely on a chatbot, you got off lightly,” one comment read. Others pointed out that while Spanish citizens do not need a visa for Puerto Rico, they must have an approved Electronic System for Travel Authorization (ESTA) before arrival, since the island is a U.S. territory.

In a separate case in the United States, a 60-year-old man was hospitalised for three weeks after replacing regular table salt with a toxic chemical on the advice of an AI chatbot. The man, who had no history of mental illness, developed hallucinations, paranoia, severe anxiety, and other symptoms. Doctors later diagnosed him with bromism, a rare condition caused by excessive intake of sodium bromide, a chemical once used in sedatives but now mainly found in swimming pool cleaning products.

Medical staff reported that the man arrived at the hospital claiming his neighbour was trying to poison him. A later test of the same AI chatbot showed it was still recommending sodium bromide as a salt substitute without any warning about its dangers. The case has raised further concerns about the safety of relying on AI systems for sensitive or potentially life-threatening advice.